CS3 In the News

Peter Dizikes, MIT News Office

The structure of the auditing business appears problematic: Typically, major companies pay auditors to examine their books under the so-called “third-party” audit system. But when an auditing firm’s revenues come directly from its clients, the auditors have an incentive not to deliver bad news to them.

So: Does this arrangement affect the actual performance of auditors?

In an eye-opening experiment involving roughly 500 industrial plants in the state of Gujarat, in western India, changing the auditing system has indeed produced dramatically different outcomes — reducing pollution, and more generally calling into question the whole practice of letting firms pay the auditors who scrutinize them.

“There is a fundamental conflict of interest in the way auditing markets are set up around the world,” says MIT economist Michael Greenstone, one of the co-authors of the study, whose findings are published today in the Quarterly Journal of Economics. “We suggested some reforms to remove the conflict of interest, officials in Gujarat implemented them, and it produced notable results.”

The two-year experiment was conducted by MIT and Harvard University researchers along with the Gujarat Pollution Control Board (GPCB). It found that randomly assigning auditors to plants, paying auditors from central funds, double-checking their work, and rewarding the auditors for accuracy had large effects. Among other things, the project revealed that 59 percent of the plants were actually violating India’s laws on particulate emissions, but only 7 percent of the plants were cited for this offense when standard audits were used.

Across all types of pollutants, 29 percent of audits, using the standard practice, wrongly reported that emissions were below legal levels.

The study also produced real-world effects: The state used the information to enforce its pollution laws, and within six months, air and water pollution from the plants receiving the new form of audit were significantly lower than at plants assessed using the traditional method.

The co-authors of the paper are Greenstone, the 3M Professor of Environmental Economics at MIT; Esther Duflo, the Abdul Latif Jameel Professor of Poverty Alleviation and Development Economics at MIT; Rohini Pande, a professor of public policy at the Harvard Kennedy School; and Nicholas Ryan PhD ’12, now a visiting postdoc at Harvard.

The power of random assignment

The experiment involved 473 industrial plants in two parts of Gujarat, which has a large manufacturing industry. Since 1996 the GPCB has used the third-party audit system, in which auditors check air and water pollution levels three times annually, then submit a yearly report to the GPCB.

To conduct the study, 233 of the plants tried a new arrangement: Instead of auditors being hired by the companies running the plants, the GPCB randomly assigned them to plants in this group. The auditors were paid fixed fees from a pool of money; 20 percent of their audits were randomly chosen for re-examination. Finally, the auditors received incentive payments for accurate reports.
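The assignment and re-examination steps described above can be sketched in a few lines of Python. This is an illustration only, not the procedure the GPCB or the researchers actually ran: the function name `assign_auditors`, the pool of 30 auditors, and the fixed random seed are all hypothetical. Only the 233-plant group size and the 20 percent re-examination share come from the article.

```python
import random


def assign_auditors(plant_ids, auditor_ids, backcheck_share=0.20, seed=0):
    """Randomly pair each plant with an auditor and pick audits to re-examine."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    # Each plant draws an auditor at random instead of hiring one itself.
    assignment = {plant: rng.choice(auditor_ids) for plant in plant_ids}
    # A random share of the audits is set aside for independent re-checking.
    n_backchecks = round(backcheck_share * len(plant_ids))
    backchecked = set(rng.sample(plant_ids, n_backchecks))
    return assignment, backchecked


plants = [f"plant_{i:03d}" for i in range(233)]      # group size from the article
auditors = [f"auditor_{i:02d}" for i in range(30)]   # hypothetical auditor pool
assignment, backchecked = assign_auditors(plants, auditors)
```

Because neither the auditor nor the re-checked audits are chosen by the plant, a plant cannot reward a lenient auditor with repeat business, which is the conflict of interest the experiment set out to remove.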

In comparing the 233 plants using the new method with the 240 using the standard practice, the researchers uncovered that almost 75 percent of traditional audits reported particulate-matter emissions just below the legal limit; using the randomized method, only 19 percent of plants fell in that narrow band.

All told, across several different air- and water-pollution measures, inaccurate reports of plants complying with the law dropped by about 80 percent when the randomized method was employed.

The researchers emphasize that the experiment enabled the real-world follow-up to occur.

“The ultimate hope with the experiment was definitely to see pollution at the firm level drop,” Duflo says. The state’s enforcement was effective, as Pande explains, partly because “it becomes cheaper for some of the more egregious pollution violators to reduce pollution levels than to attempt to persuade auditors to falsify reports.”

According to Ryan, the Gujarat case also dispels myths about the difficulty of enforcing laws, since the experiment “shows the government has credibility and will.”

But how general is the finding?

In the paper, the authors broaden their critique of the audit system, referring to standard corporate financial reports and the global debt-rating system as other areas where auditors have skewed auditing incentives. Still, it is an open question how broadly the current study’s findings can be generalized.

“It would be a mistake to assume that quarterly financial reports for public companies in the U.S. are exactly the same as pollution reports in Gujarat, India,” Greenstone acknowledges. “But one thing I do know is that these markets were all set up with an obvious fundamental flaw — they all have the feature that the auditors are paid by the firms who have a stake in the outcome of the audit.”

Some scholars of finance say the study deserves wide dissemination.

“This is a wonderful paper,” says Andrew Metrick, a professor and deputy dean at the Yale School of Management. “It is a very strong piece of evidence that, in the context they studied, random assignment produces unbiased results. And I think it’s broadly applicable.”

Indeed, Metrick says he may make the paper required reading in a new program Yale established this year that provides research and training for financial regulators from around the world.

To be sure, many large corporations have complicated operations that cannot be audited in the manner of emissions; in those cases, a counterargument goes, retaining the same auditor who knows the firm well may be a better practice. But Metrick suggests that in such cases, auditors could be randomly assigned to firms for, say, five-year periods. At a minimum, he notes, the Dodd-Frank law on financial regulation mandates further study of these issues.

Greenstone also says he hopes the current finding will spur related experiments, and gain notice among regulators and policymakers.

“No one has really had the political will to do something about this,” Greenstone says. “Now we have some evidence.”

The study was funded by the Center for Energy and Environmental Policy Research, the Harvard Environmental Economics Program, the International Growth Centre, the International Initiative for Impact Evaluation, the National Science Foundation and the Sustainability Science Program at Harvard.

The Sverdrup Gold Medal is "granted to researchers who make outstanding contributions to the scientific knowledge of interactions between the oceans and the atmosphere." The award, in the form of a medallion, will be presented at the AMS Annual Meeting to be held on 2–6 February 2014 in Atlanta, GA.

John Marshall is an oceanographer with broad interests in climate and the general circulation of the atmosphere and oceans, which he studies through the development of mathematical and numerical models of physical and biogeochemical processes. His research has focused on problems of ocean circulation involving interactions between motions on different scales, using theory, laboratory experiments, and observations as well as innovative approaches to global ocean modeling pioneered by his group at MIT.

Current research foci include: ocean convection and subduction, stirring and mixing in the ocean, eddy dynamics and the Antarctic Circumpolar Current, the role of the ocean in climate, climate dynamics, aquaplanets.

Professor Marshall received his PhD in atmospheric sciences from Imperial College, London in 1980. He joined EAPS in 1991 as an associate professor and has been a professor in the department since 1993. He was elected a Fellow of the Royal Society in 2008. He is coordinator of Oceans at MIT, a new umbrella organization dedicated to all things related to the ocean across the Institute, and director of MIT’s Climate Modeling Initiative (CMI).

China’s deployment of renewable electricity generation – starting with hydropower, then wind, and now biomass and solar – is massive. China leads the world in installed renewable energy capacity (both including and excluding hydro) and has sustained annual wind additions in excess of 10 gigawatts (10 GW) for four straight years. Half of the hydropower installed worldwide last year was in China. And solar and biomass-fired electricity are expected to grow ten-fold over the period 2010-2020. Most striking amidst all these impressive accomplishments has been the Chinese government’s seemingly unwavering financial support for renewable energy generators even as other countries scale back or restructure similar support programs.

The balance sheets of the central renewable energy fund are changing, however. Supplied primarily through a fixed surcharge on all electricity purchases, it has faced increasing shortfalls in recent years as renewable growth picked up, which may have contributed to late or non-payment to generators. Especially as more costly solar comes online, both the revenue streams and subsidy outlays to generators will require difficult modifications to keep the fund solvent. More broadly, investment decisions are largely influenced by the historically high penetration of state-owned energy companies in the renewables sector, which have responsibilities to the state besides turning a profit.

Recognizing these challenges of solvency and efficiency, the central government is facing a crossroads in its policy support for the renewable sector. One possible approach would be migrating to a hybrid system of generation subsidies coupled with mandatory renewable portfolio standards (RPS). This fourth and final post in the Transforming China’s Grid series looks out to 2020 at how China’s renewable energy policies may evolve and how they must evolve to ensure strong growth in the share of renewable energy in the power mix.

Policy Support to Date

Investment in renewable energy has risen steadily in China over the last decade, with the wind and solar sectors hitting a record $68 billion in 2012, according to Bloomberg New Energy Finance (BNEF). These sums – together with massive state-led investments in hydropower – have translated into a surge of renewable energy capacity, which since 2006 included annual wind capacity additions of 10-15 GW and a near doubling of hydropower (see graph). Renewables now provide more than a quarter of China’s electricity generating capacity.

Early on in both the wind and solar sectors, the tariffs paid to generators were determined by auction in designated resource development areas (called concessions). These auctions underwent a number of iterations to get at rates the market will bear before policy support was transitioned to the fixed regional feed-in-tariffs currently in place: 0.51-0.61 yuan / kWh (8.3-10.0 US¢ / kWh) for wind, and 0.90-1.00 yuan / kWh (15-16 US¢ / kWh) for solar. The result of this methodical policy evolution was the steady growth of wind and solar power capacity year-after-year. Contrast these with the uneven capacity additions of wind in the U.S., attributable to the haphazard boom-bust cycles in U.S. wind policy (see graph). Hydropower project planning is directed by the government and rates are set project-by-project (typically lower than the wind or solar FITs).

Also important to developers – though not captured in BNEF’s investment totals – are reduced value-added-taxes on renewable energy projects, preferential land and loan terms, as well as significant transmission projects serving renewable power bases socialized across all ratepayers. On the manufacturing side, the government has also stepped in to prop up and consolidate key solar companies.

Guiding these policies has been continued ratcheting up of capacity targets beginning with the Medium to Long-Term Renewable Energy Plan in 2007. These national goals – while not legally binding – shape sectoral policies and encourage local officials to go the extra mile in support of these types of projects. The most recent iterations call for 104 GW of wind, 260 GW of hydro, and 35 GW of solar installed and grid-connected by 2015 (see table). In addition to these “soft” pushes, generators with over 5 GW of capacity were required under the 2007 plan to reach specified capacity targets for non-hydro renewables: 3% by 2010 and 8% by 2020. However, there appeared to be no penalty for non-compliance: half of the companies missed their 2010 mandatory market share targets.

China’s renewable energy targets as of September 2013 (GW, grid-connected)

| | 2012 Actualᵃ | 2015 Goal | 2020 Goal |
|---|---|---|---|
| Windᵇ | 62 | 104 | 200 |
| Hydroᶜ | 249 | 290 | 420 |
| Solarᵈ | 3 | 35ᵉ | 50 |
| Biomass | 4 | 13ᶠ | 30ᵍ |

Rubber Missing the Road in Generation

Amidst the backdrop of impressive capacity additions, a separate story has unfolded with respect to generation. Wind in China faces twin challenges of connection and curtailment, as I outlined previously, which result in much lower capacity factors than wind turbines abroad. These have persisted for several years, so one might think that wise developers would demand higher tariffs before investing and a new, lower equilibrium would be established.

But the incentives to invest in China’s power sector are rarely based on economics alone. The vast majority of wind projects are developed by large, state-owned enterprises (SOEs). In recent years, SOEs have been responsible for as much as 90% of wind capacity installed (for comparison, SOEs are responsible for an average of 70% for the overall power mix). In 2011, the top 10 wind developers were all SOEs, which faced some scrutiny under the 2010 mandatory share requirements because of their size. In addition, because generators only faced a capacity requirement, it was more important to get the turbines in the ground than get them spinning right away (though as we saw, many still missed their capacity targets). Grid companies, on the other hand, had generation targets (1% by 2010 and 3% by 2020), which were also unmet in some locations. The next round of policies has sought to address both generation and connection issues.

Other Cracks in the Support Structure

Though generation lagged capacity, it was still growing much faster than predicted, leading to shortfalls in funds to pay the feed-in-tariff. A single surcharge on all electricity purchases supplies the centrally-administered renewable energy fund, which fell short by 1.4 billion yuan ($200 million) in 2010 and 22 billion yuan ($3.4 billion) in 2011. Prior to the recent surcharge rise, some estimated the shortfall would rise to 80 billion yuan ($14 billion) by 2015. The difference would either not make it to developers or have to be appropriated from elsewhere.

In addition, from 2010-2012, there were long delays in reimbursing generators their premium under the FIT. The situation was so serious that the central planning ministry, the National Development and Reform Commission (NDRC), put out a notice in 2012 demanding grid companies pay the two-year-old backlog. These receivables issues are particularly damaging to wind developers who operate on slim margins and need equity to invest in new projects.

To address the solvency of the renewable energy fund, in August, the NDRC doubled the electricity surcharge on industrial customers to 0.015 yuan / kWh (0.25 US¢ / kWh), keeping the residential and agriculture surcharge at 0.008 yuan / kWh (0.13 US¢ / kWh) (Chinese announcement). With a little over three-quarters of electricity going to industry, this will substantially increase the contributions to the fund. At the same time, solar FITs were scaled back slightly by instituting a regional three-tier system akin to that developed for wind: sunny but remote areas in the north and northwest offer 0.90-0.95 yuan / kWh (15-15.5 US¢ / kWh) while eastern and southern provinces close to load centers but with lower quality resources offer 1 yuan / kWh (16 US¢ / kWh) (Chinese announcement).
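To get a rough sense of how much the surcharge change adds to the fund, the consumption-weighted average surcharge can be worked out as below. This is a back-of-the-envelope sketch under stated assumptions: the industrial share is rounded to exactly 75 percent, the previous surcharge is taken to be 0.008 yuan / kWh for all customers, and exemptions or collection losses are ignored.

```python
OLD_SURCHARGE = 0.008    # yuan/kWh; assumed uniform rate before the change
NEW_INDUSTRIAL = 0.015   # yuan/kWh; industrial rate after the August increase
NEW_OTHER = 0.008        # yuan/kWh; residential and agricultural rate (unchanged)
INDUSTRY_SHARE = 0.75    # assumption: "a little over three-quarters", rounded


def average_surcharge(industry_share, industrial_rate, other_rate):
    """Consumption-weighted average surcharge per kWh sold."""
    return industry_share * industrial_rate + (1 - industry_share) * other_rate


new_avg = average_surcharge(INDUSTRY_SHARE, NEW_INDUSTRIAL, NEW_OTHER)
increase = new_avg / OLD_SURCHARGE - 1  # fractional rise in fund revenue per kWh
print(f"average surcharge: {new_avg:.5f} yuan/kWh ({increase:.0%} increase)")
```

Under these assumptions the average surcharge rises from 0.008 to about 0.013 yuan / kWh, so fund revenue per kWh sold grows by roughly two-thirds rather than doubling, because the residential and agricultural rate was left unchanged.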

Additionally, distributed solar electricity consumed on-site (which could be anything from rooftops to factories with panels) will receive a 0.42 yuan / kWh (6.9 US¢ / kWh) subsidy. Excess electricity sold back on the grid, where grid connections and policy are in place, will be at the prevailing coal tariff, ranging from 0.3-0.5 yuan / kWh (5-8 US¢ / kWh). It is unclear if these adjustments will mitigate the expected large financial demands to support solar (whose FIT outlays per kWh are still more than double wind).

Wind, whose FIT has been in place since 2009, may not be immune to this restructuring either. Some cite the falling cost of wind equipment and the fund gap as cause for scaling back wind subsidies.

Where to Go From Here

Despite this budget squeeze, the Chinese government seems intent on sustaining the clean energy push. Even as it weakens financial incentives for renewable energy, the central government is getting smarter about how to achieve its long-term clean energy targets. Last year the National Energy Administration (NEA) released draft renewable portfolio standards (RPS), which would replace the mandatory share program with a tighter target focused on generation: an average of 6.5% from non-hydro renewables by 2015. Grid companies will have purchase requirements ranging from 3% to 15%, and provincial consumption targets range from 1% to 15% (more details here, subscription req’d). This approach appropriately recognizes the myriad regulatory barriers to increasing wind uptake by putting responsibility for meeting targets on all stakeholders.

China is breaking new ground as it shifts further toward low-carbon sources of electricity. What has worked in the past, when wind and solar’s contributions to China’s energy mix were minor, will likely not be sufficient to meet cost constraints and integration challenges out to 2020. As with all policies in China, designing the policy is less than half the battle; implementation and enforcement are central to changing the status quo.

Environmental controls designed to prevent leaks of methane from newly drilled natural gas wells are effective, a study has found — but emissions from existing wells in production are much higher than previously believed.

The findings, reported today in the Proceedings of the National Academy of Sciences¹, add to a burgeoning debate over the climate impact of replacing oil- and coal-fired power plants with those fuelled by natural gas. Significant leaks of heat-trapping methane from natural gas production sites would erase any climate advantage the fuel offers.

One concern is the potential release of methane during hydraulic fracturing, or 'fracking', which uses injections of high-pressure fluids to shatter rock and release trapped gas. Before production can commence, the well must be 'completed' by removal of the fracking fluids, which contain gas that can escape to the air.

To test the effectiveness of current controls, the researchers installed emissions-monitoring equipment at 27 wells during their completions in 2012 and 2013. Their results suggest that current controls reduce emissions in such wells by 99% compared to sites where the technology is not used, says lead author David Allen, an engineer at the University of Texas in Austin.

The researchers' estimate of annual emissions from wells undergoing completion, 18,000 tonnes per year, is also roughly 97% less than the estimate given in 2011 by the US Environmental Protection Agency (EPA).

Less encouraging was what the team discovered at 150 other well sites that were already producing natural gas. Such wells often use pneumatic controllers, which siphon off pressurized natural gas from the well and use it to operate production-related equipment. "As part of their normal operation, they emit methane into the atmosphere," Allen says.

His team's work suggests that emissions from pneumatic controllers and other equipment at production wells are 57–67% higher than the current EPA estimate. However, the study also finds total methane emissions from all phases of natural gas production to be about 2.3 million tonnes per year, about 10% lower than the EPA estimate of 2.5 million tonnes…

Henry Jacoby, an economist and former director of the Joint Program on the Science and Policy of Global Change at Massachusetts Institute of Technology in Cambridge, agrees. "This is important work," he says, "but the great bulk of the problem is elsewhere, downstream in the natural gas system", including poorly capped oil and gas wells no longer in production.

Read the complete article here.

Reprinted by permission from Macmillan Publishers Ltd: Nature (doi:10.1038/nature.2013.13748), Copyright 2013.

Photo Credit: Steve Starr/Corbis

By Chris Knittel and John Parsons

Professor Robert Pindyck has a new working paper (CEEPR-WP-13-XXX) that has attracted a good share of attention since it steps into the highly charged debate on the reliability of research related to climate change. But in this case, the focus is on what we learn from one class of economic model, the so-called integrated assessment models (IAMs). These models have been used to arrive at a “social cost of carbon” (SCC). For example, in 2010 a U.S. Government Interagency Working Group recommended $21/t CO2 as the social cost of carbon to be employed by US agencies in conducting cost-benefit analyses of proposed rules and regulations. This figure was recently updated to $33/t. Professor Pindyck’s paper calls attention to the wide, wide range of uncertainty surrounding key inputs to IAMs, and to the paucity of reliable empirical data for narrowing the reasonable range of input choices. The paper then suggests profitable directions for reorienting future research and analysis.

Reflecting the highly charged nature of the U.S. political debate on climate change, Professor Pindyck’s paper has been seized on by opponents of action. In particular, certain blogs have cited his paper in support of their campaign against any action. Here is one example—link.

Interestingly, Professor Pindyck is an advocate of action on climate change, such as levying a carbon tax. So his own view of the implications of his research is quite different from that of those who oppose any action. This post at the blog of the Natural Resources Defense Council includes more extensive comments by Professor Pindyck on the debate—link.

An alternative approach is to think about Professor Pindyck’s review as a guide for future research on the costs of climate change which is better focused to address the important uncertainties in a way that can better contribute to public discussion and analysis. CEEPR researcher Dr. John Parsons emphasizes this point in his blog post about Pindyck’s paper—link.

Jennifer Chu, MIT News Office

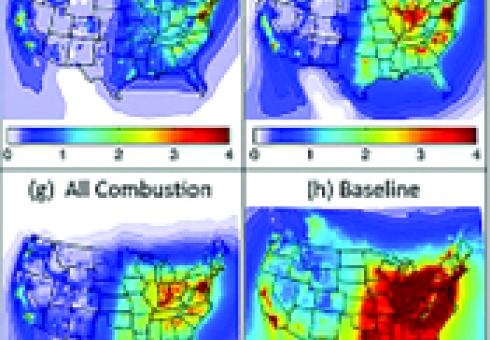

Researchers from MIT’s Laboratory for Aviation and the Environment have come out with some sobering new data on air pollution’s impact on Americans’ health.

The group tracked ground-level emissions from sources such as industrial smokestacks, vehicle tailpipes, marine and rail operations, and commercial and residential heating throughout the United States, and found that such air pollution causes about 200,000 early deaths each year. Emissions from road transportation are the most significant contributor, causing 53,000 premature deaths, followed closely by power generation, with 52,000.

In a state-by-state analysis, the researchers found that California suffers the worst health impacts from air pollution, with about 21,000 early deaths annually, mostly attributed to road transportation and to commercial and residential emissions from heating and cooking.

The researchers also mapped local emissions in 5,695 U.S. cities, finding the highest emissions-related mortality rate in Baltimore, where 130 out of every 100,000 residents likely die in a given year due to long-term exposure to air pollution.

“In the past five to 10 years, the evidence linking air-pollution exposure to risk of early death has really solidified and gained scientific and political traction,” says Steven Barrett, an assistant professor of aeronautics and astronautics at MIT. “There’s a realization that air pollution is a major problem in any city, and there’s a desire to do something about it.”

Barrett and his colleagues have published their results in the journal Atmospheric Environment.

Data divided

Barrett says that a person who dies from an air pollution-related cause typically dies about a decade earlier than he or she otherwise might have. To determine the number of early deaths from air pollution, the team first obtained emissions data from the Environmental Protection Agency’s National Emissions Inventory, a catalog of emissions sources nationwide. The researchers collected data from the year 2005, the most recent data available at the time of the study.

They then divided the data into six emissions sectors: electric power generation; industry; commercial and residential sources; road transportation; marine transportation; and rail transportation. Barrett’s team fed the emissions data from all six sources into an air-quality simulation of the impact of emissions on particles and gases in the atmosphere.

To see where emissions had the greatest impact, they removed each sector of interest from the simulation and observed the difference in pollutant concentrations. The team then overlaid the resulting pollutant data on population-density maps of the United States to observe which populations were most exposed to pollution from each source.
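The remove-one-sector procedure described above can be illustrated with a toy calculation. Everything in this sketch is hypothetical: the three grid cells, the emission and population figures, and the `simulate` function, which simply adds up emissions in each cell and stands in for the far more detailed air-quality simulation the researchers used. Only the six sector names and the overall sequence (baseline run, run without one sector, difference, overlay on population) follow the article.

```python
SECTORS = ["power", "industry", "commercial_residential", "road", "marine", "rail"]

# Hypothetical emissions per sector in three grid cells (arbitrary units).
emissions = {
    "power": [4.0, 1.0, 0.5],
    "industry": [2.0, 1.5, 0.5],
    "commercial_residential": [0.5, 2.0, 0.2],
    "road": [1.0, 3.0, 0.5],
    "marine": [0.0, 0.5, 1.0],
    "rail": [0.2, 0.2, 0.1],
}
population = [100_000, 500_000, 50_000]  # hypothetical residents per grid cell


def simulate(active_sectors):
    """Toy air-quality model: concentration in a cell is the sum of its emissions."""
    return [sum(emissions[s][i] for s in active_sectors)
            for i in range(len(population))]


def sector_exposure(sector):
    """Population-weighted concentration change when one sector is removed."""
    baseline = simulate(SECTORS)
    without = simulate([s for s in SECTORS if s != sector])
    delta = [b - w for b, w in zip(baseline, without)]
    return sum(d * p for d, p in zip(delta, population))


exposure = {s: sector_exposure(s) for s in SECTORS}
ranked = sorted(exposure, key=exposure.get, reverse=True)
```

In this toy setup the road sector ranks first because its emissions are concentrated in the most populous cell, not because it emits the most overall. Atmospheric chemistry is not additive in a real model, which is why the difference between two full simulations is used instead of simply reading off each sector's emissions.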

Health impacts sector by sector

The greatest number of emissions-related premature deaths came from road transportation, with 53,000 early deaths per year attributed to exhaust from the tailpipes of cars and trucks.

“It was surprising to me just how significant road transportation was,” Barrett observes, “especially when you imagine [that] coal-fired power stations are burning relatively dirty fuel.”

One explanation may be that vehicles tend to travel in populated areas, increasing large populations’ pollution exposure, whereas power plants are generally located far from most populations and their emissions are deposited at a higher altitude.

Pollution from electricity generation still accounted for 52,000 premature deaths annually. The largest impact was seen in the east-central United States and in the Midwest: Eastern power plants tend to use coal with higher sulfur content than Western plants.

Unsurprisingly, most premature deaths due to commercial and residential pollution sources, such as heating and cooking emissions, occurred in densely populated regions along the East and West coasts. Pollution from industrial activities was highest in the Midwest, roughly between Chicago and Detroit, as well as around Philadelphia, Atlanta and Los Angeles. Industrial emissions also peaked along the Gulf Coast region, possibly due to the proximity of the largest oil refineries in the United States.

Southern California saw the largest health impact from marine-derived pollution, such as from shipping and port activities, with 3,500 related early deaths. Emissions-related deaths from rail activities were comparatively slight, and spread uniformly across the east-central part of the country and the Midwest.

By Genevieve Wanucha

At “Debating the Future of Solar Geoengineering,” a debate hosted last week by the MIT Joint Program on the Science and Policy of Global Change, four leading thinkers in geoengineering laid out their perspectives on doctoring our atmosphere to prevent climate emergency. The evening featured Stephen Gardiner of the University of Washington, David Keith of Harvard University, Alan Robock of Rutgers University, and Daniel Schrag of Harvard University. Oliver Morton from The Economist ran the show as a deft and witty moderator.

The debate focused on the easiest, fastest, and cheapest geoengineering option on the table: solar radiation management. This technique would involve the intentional injection of sulfate aerosols into the Earth’s upper atmosphere, the stratosphere. These aerosols, the same particles released by volcanic eruptions, would reflect sunlight away from Earth, cool the planet, and, in theory, stabilize climate.

While climate modeling shows that solar radiation management would reduce risks for some people alive today, there are a number of reasons why this technique might be a bad idea, Alan Robock said. Pumping particles into the stratosphere could shift rainfall patterns and chew up the ozone layer, thus tinkering with the amount of water and UV light reaching human and ecological systems. “We are going to put the entire fate of the only planet we know that can sustain life on this one technical intervention that may go wrong?” he challenged.

Robock’s stance is what David Keith soon called “the very common, intuitive, and healthy reaction that geoengineering is ‘nuts’ and we should just get on with cutting emissions.” But Keith and Dan Schrag systematically picked the argument apart as they made the case that, even in the most optimistic of scenarios, we may not be able to solve the climate problem by acting on greenhouse gas emissions alone. For them, geoengineering is a real option.

Humans are burning enough fossil fuels to put 36 billion tons of CO2 into the air every year. And because the gas stays in the atmosphere for incredibly long time periods, we’re already committed to global warming far into the future. “Climate is going to get a lot worse before it gets better,” said Schrag. “We have to push for emissions reductions, but the world is going to put a lot more CO2 in the atmosphere, and we better figure out what to do about it.”

The debate was more nuanced than a “to geoengineer or not to geoengineer” type of thing. Solar radiation management, Keith and Gardiner agreed, would not be ethical in the absence of a simultaneous reduction in CO2 emissions. As computer simulations by University of Washington researchers indicate, if we were to inject aerosols for a time, while continuing to emit carbon dioxide as usual, a sudden cessation of the technique for any reason would be disastrous. The aerosols would quickly fall to natural levels, and the planet would rapidly warm at a pace far too fast for humans, ecosystems, and crops to adapt.

“So if, as a result of decisions to implement solar engineering to reduce risks now, we do less to cut emissions and emit more than we otherwise would, then we are morally responsible for passing risk on to future generations,” said Keith.

Caveats to geoengineering continued to roll in during the Q&A. The technique would likely end up a dangerous catch-22 in the real world, according to Kyle Armour, postdoc in the MIT Department of Earth, Atmospheric and Planetary Sciences: “The case can be made that the times we would be most likely to use solar radiation management, such as in a climate emergency, are precisely the times when it would be most dangerous to do so.” In essence, implementing geoengineering to tackle unforeseen environmental disaster would entail a rushed response to a climate system we don’t understand with uncertain technology.

The post-debate reception buzzed with the general feeling that the panelists lacked enough “fire in their bellies.” “Debate? What debate?” asked Jim Anderson, Professor of Atmospheric Chemistry at Harvard. “I was expecting Italian parliament fisticuffs,” said Sarvesh Garimella, a graduate student in the MIT Department of Earth, Atmospheric and Planetary Sciences. Perhaps this was because, as several MIT graduate students noted, the debaters never touched the most fundamental research needed to evaluate the viability of geoengineering: aerosol effects on clouds.

The thing is, aerosols in the stratosphere do reflect sunlight and exert a cooling effect on Earth. “But they have to go somewhere,” said MIT’s Dan Cziczo, Associate Professor of Atmospheric Chemistry, who studies how aerosols, clouds, and solar radiation interact in Earth’s atmosphere. “Particles fall down into the troposphere where they can have many other effects on cloud formation, which have not been sorted out. They could cancel out any cooling we achieve, cool more than we anticipate, or even create warming,” Cziczo said. Indeed, the most recent Intergovernmental Panel on Climate Change (IPCC) report lists aerosol effects on clouds as the largest uncertainty in the climate system. “I don’t understand why you would attempt to undo the highly certain warming effect of greenhouse gases with the thing we are the least certain about.” For Cziczo, this is a non-starter.

The panelists largely acknowledged that we don’t understand the technique’s potential effects well enough to geoengineer today, but they have no plans to give up. Keith noted that a non-binding international memorandum laying out principles of transparency and risk assessment is needed now, along with vastly expanded research programs. “Before we go full scale,” said Keith as the debate came to a close, “we have to broaden far beyond the small clique of today’s geoengineering thinkers, but that doesn’t have to take decades.”

Watch the video here.

Four MIT students won first place in a competition held by the U.S. Association for Energy Economics (USAEE) aimed at tackling today’s energy challenges and preparing solutions for policymakers and industry. The students, Ashwini Bharatkumar, Michael Craig, Daniel Cross-Call and Michael Davidson, competed against teams from other North American universities to develop a business model for a fictitious utility company in California facing uncertain electricity growth from a rise in electric vehicle charging.

“Overall, the case competition was a great opportunity to consider solutions to the very challenges that electric utilities are facing today,” says Bharatkumar.

With the goal of minimizing distribution system upgrade costs, the MIT team tested how well several business models or technology alternatives could address the utility company’s challenge. These included: implementing a real-time pricing and demand response program, using battery storage, using controlled charging, or some combination of the three.

The MIT students found that, instead of simply expanding the transmission and distribution network to accommodate the increased demand, the better course of action would be to install advanced metering infrastructure and implement controlled charging to shift the electric vehicle load to off-peak hours. They also recommended modifying the rate structure to include capacity – not just energy – costs. For example, grid users choosing to charge their vehicles during peak hours would incur an additional fee.
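The load-shifting idea behind controlled charging can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the hourly load profile, the 40 MWh of daily charging energy, and the function names are hypothetical and are not taken from the team's presentation. The sketch uses a simple greedy "valley filling" rule, which is only one of many possible charging strategies.

```python
def uncontrolled_peak(base_load, ev_load_by_hour):
    """Peak demand (MW) when vehicles charge whenever drivers plug in."""
    return max(b + e for b, e in zip(base_load, ev_load_by_hour))


def controlled_charging(base_load, ev_energy, step=1.0):
    """Greedy valley filling: assign charging energy to the lowest-load hour first.

    base_load: hourly demand without vehicles (MW, one value per hour)
    ev_energy: total charging energy to deliver over the day (MWh)
    step:      energy placed per iteration (MWh); smaller is smoother
    """
    load = list(base_load)
    remaining = ev_energy
    while remaining > 1e-9:
        hour = min(range(len(load)), key=lambda h: load[h])  # current valley
        added = min(step, remaining)
        load[hour] += added
        remaining -= added
    return load


# Hypothetical 24-hour profile (MW): night, daytime, evening peak, late evening.
base_load = [50] * 6 + [70] * 11 + [90] * 4 + [60] * 3

# Hypothetical uncontrolled case: 10 MW of charging during the four peak hours.
uncontrolled_ev = [0] * 17 + [10] * 4 + [0] * 3

shifted = controlled_charging(base_load, ev_energy=40)

print("Peak with uncontrolled charging:", uncontrolled_peak(base_load, uncontrolled_ev))
print("Peak with controlled charging:  ", max(shifted))
```

In this toy profile the same charging energy is delivered in both cases, but shifting it into the overnight valley leaves the system peak unchanged, which is the effect that would let a utility defer distribution upgrades.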

The team presented their recommendations at the annual USAEE and International Association for Energy Economics North American Conference in Anchorage, Alaska on July 29-31.

The MIT team’s presentation may be found here: http://www.usaee.org/usaee2013/submissions/Presentations/MIT_CaseComp_July29.ppt

Other presentations are available at: http://www.usaee.org/USAEE2013/program_concurrent.aspx

By Michael Davidson

Wind is China’s fastest growing renewable energy resource. In 2012, 13 gigawatts (GW) were added to the system, and incremental wind electricity production exceeded coal growth for the first time ever. In the same year, unused wind electricity hit record highs while wind not connected to the grid was roughly half the size of Germany’s fleet. China’s is perhaps the largest yet most inefficient wind power system in the world.

As a variable, diffuse and spatially segregated energy resource, wind has a number of disadvantages compared to centralized fossil stations. These unavoidable limitations are not unique to China, though they are magnified by its geography. In addition, as I outlined in a previous post, coal has uniquely shaped China’s power sector development and operation, and this legacy also plays a role in limiting wind’s utilization. Eyeing ambitious 2020 renewable energy targets and beyond, policy-makers and grid operators are confronting a vexing decision: continue the status quo of rapidly expanding wind deployment while swallowing diminished capacity factors, or focus more on greater integration through targeted reforms.

Getting the Power to Market

Unlike other countries with varying political support for renewable energy, wind in China enjoys a privileged status. A well-funded feed-in-tariff (FIT) and other government support since 2006 encouraged an annual doubling of wind capacity for four consecutive years, followed by 10-15 GW additions thereafter. Wind projects are typically far from city and industrial centers where electricity is needed, however, and transmission investments to connect to the grid did not keep pace. This remarkable gap left turbines – as many as a third of them in 2010 – languishing unconnected, unable to sell their electricity (see graph).

From Brazil to Germany, grid connection delays – primarily transformer and line right-of-way siting, permitting and construction – have occurred where there is rapid wind power development. China, however, had until mid-2011 a unique policy that exacerbated the wind-grid mismatch: all projects smaller than 50 MW could be approved directly by local governments, bypassing more rigorous feasibility analyses, particularly those related to grid access. The delay of central government reimbursement to overburdened local grids for construction may also be responsible. The level of non-grid connected capacity was hovering around 15 GW as of the end of 2012.

If you are a wind farm owner and have successfully connected to the grid, you might still face hurdles when trying to transmit your power to load centers. Grid operators make decisions a day ahead on which thermal plants to turn on, so if wind is significantly higher than forecast 24 hours earlier, the difference may be curtailed (or “spilled”) to maintain grid stability. If wind is at the end of a congested transmission line, the grid operator may also have to curtail, as happens in ERCOT (Texas’ grid) and northwest China. Finally, to manage hourly variation, grid operators will accept only as much wind as they can offset by ramping other generators up and down to keep supply and demand in balance. The thermodynamics of fossil fuel plants place limits on this flexibility.
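The curtailment logic described above can be sketched for a single hour. This is a deliberately simplified illustration, not a model of any real dispatch system: the function name, its parameters and the example numbers are all hypothetical, and the sketch ignores ramp-rate limits, reserves and line losses. It assumes that committed thermal plants cannot drop below a combined minimum output, so wind only fills the demand left over, and that a congested line may cap how much wind can be delivered.

```python
def curtailed_wind(demand, wind_available, thermal_min, line_limit=None):
    """Return (wind_used, wind_curtailed) for one hour, all values in MW.

    demand:         system load to be served
    wind_available: wind output the turbines could produce
    thermal_min:    combined minimum output of thermal plants already committed
    line_limit:     optional cap on wind delivered over a congested line
    """
    # Wind that can physically reach the load.
    deliverable = wind_available if line_limit is None else min(wind_available, line_limit)
    # Demand left for wind once thermal plants sit at their minimum output.
    room = max(demand - thermal_min, 0.0)
    used = min(deliverable, room)
    return used, wind_available - used


# Hypothetical hour: 100 MW of demand and 40 MW of available wind.
print(curtailed_wind(100, 40, thermal_min=70))                 # thermal minimum binds
print(curtailed_wind(100, 40, thermal_min=50))                 # all wind accepted
print(curtailed_wind(100, 40, thermal_min=50, line_limit=20))  # congestion binds
```

Raising `thermal_min` in this sketch shrinks the room left for wind, which is the mechanism by which large, inflexible plants increase spilled wind.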

As with grid connection, China’s curtailment problems are much more severe than those of its peers (see graph). The latest provincial figures, for 2011, pegged curtailment at between 10% and 20%, and reports on 2012 show it skyrocketing to as high as 50% in some regions. By comparison, ERCOT peaked at 17% in 2009 and was 3.7% last year. This difference is largely, though not exclusively, attributable to two factors. First, China’s generation mix is coal-heavy, and coal plants are more sluggish when changing output than, for example, natural gas plants. As I described before, the increased size of coal plants makes this effect more pronounced.

Secondly, since the Small Plant Closure Program began in 2006, new coal plants built to replace the aging fleet were preferentially designed as combined heat and power (CHP) to provide residential heating and industrial inputs where available, with the northeast seeing the highest penetration. Keeping homes warm during winter nights when wind blows the strongest effectively raises the minimum output on coal plants and reduces the space for wind. Following particularly high winter curtailment in 2012, China’s central planning agency, the National Development and Reform Commission (NDRC), began encouraging projects to divert excess wind to electric water heaters and displace some fraction of coal CHP. Given the capital investments required and the losses in conversion from electricity to heat, it is not clear how economical these pilots will be.

The Politics of Power

Besides the inflexibilities in the power grid described above, several idiosyncrasies of China’s power sector governance likely have a hand in spilled wind. A product of the partial deregulation that occurred between 1997 and 2002 was the establishment of “generation quotas” for coal plants: minimum annual generation outputs fixed by province loosely to recover costs and ensure a profit. Since China no longer has vertically integrated utilities, these are not true “cost-of-service” arrangements. There may be messy politics if wind cuts into the quotas of existing plants.

On top of this, decisions to turn on, up, down or off generators on the grid (collectively referred to as “dispatch”) are fragmented by region, province and locality (read here and here for excellent primers). To bring order to these competing demands, dispatch is fairly rigid and a set of bilateral contracts between provinces has been institutionalized stipulating how much electricity can be transmitted across boundaries. The primary reason for creating a wide, interconnected grid is the ability to flexibly smooth out generation and load over a large number of units, but this kind of optimization is nigh impossible without centralization of dispatch and transmission.

Targeted reforms could help deal with these hurdles to accommodating more wind. In fact, the guiding document for power sector reform published in 2002 (State Council [2002] No. 5) lays out many of them: establish a wholesale market in each dispatch region to encourage competition in generation; open up inter-regional electricity markets; and allow for retail price competition and direct electricity contracts between producers and large consumers, among others. Former head of the National Energy Administration and key arbiter during the reform process, Zhang Guobao, vividly recounts the heated discussions [Chinese] that led to this compromise.

Ten years later, most of the challenges are well-known: separately regulated retail and wholesale prices, a semi-regulated coal sector, and political fragmentation. Recently, there may be renewed interest in tackling these remaining reform objectives. Electricity price reform was listed in a prominent State Council document on deepening economic reforms in May, and NDRC has taken steps to rectify the coal-electricity price irregularities. Still broader changes will require strong leadership.

Managing the Unpredictable

Record curtailment in 2012 prompted a strong central government backlash: a suite of reports, policy notices and pilots soon followed. These were targeted at better implementation of existing regulations (such as a mandate that grids give precedence to renewables over thermal plants), additional requirements on wind forecasting and automated turbine control, and compensation schemes for coal generators that provide ramping services. These policies and central government pressure to better accommodate renewables appear to have had an impact: all provinces except Hebei saw an increase in utilization hours in the first half of 2013 [Chinese].

Due to the unique mix of power plants and regulation in China, typical wind integration approaches such as increased transmission are important but not sufficient. China aims to generate at least 390 TWh of electricity from wind in 2020, which is roughly 5% of total production under business-as-usual and more than twice wind’s current share in percentage terms. This will put additional stresses on the nation’s purse and power grid. How China chooses to face these conflicts and grow its wind sector – through a combination of more investment and targeted reforms – will have unavoidable implications for the long-term viability of wind energy in the country.
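As a quick back-of-the-envelope check on the 2020 figures above, the stated target and share imply a total for business-as-usual electricity production. The variable names below are illustrative only, and the results are rough implications of the rounded numbers in the text, not official projections.

```python
wind_target_twh = 390      # 2020 wind generation target cited above
wind_share_percent = 5     # approximate share of total production cited above

# Total production implied by the two figures.
implied_total_twh = wind_target_twh * 100 / wind_share_percent

# If 5% is more than twice the current share, the current share is below this.
current_share_upper_bound = wind_share_percent / 2

print(f"Implied total production in 2020: about {implied_total_twh:.0f} TWh")
print(f"Implied current wind share: below {current_share_upper_bound}%")
```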

Read Parts 1 and 2 in the "Transforming China's Grid" series: "Obstacles on the Path to a National Carbon Trading System" and "Will Coal Remain King in China’s Energy Mix?"

By Mark Fischetti

As Earth’s atmosphere warms, so does the ocean. Scientists have demonstrated how rising ocean temperatures and carbon dioxide levels can stress marine organisms. But a new model developed by the Massachusetts Institute of Technology reveals a surprising conclusion: If global temperature trends continue, by the end of this century half the population of phytoplankton that existed in any given ocean at the beginning of the century will have disappeared and been replaced by entirely new plankton species. “That’s going to have impacts up the food chain,” says Stephanie Dutkiewicz, principal research scientist at M.I.T.’s Program in Atmospheres, Oceans and Climate.

Rising temperatures will force all kinds of sea creatures to adjust. Tiny phytoplankton, a major food source for fish and other sea creatures, could perish as temperatures rise in an ocean region. Most at risk are the organisms in the coldest waters, which lack the resilience to adapt to warmer homes. In theory, the phytoplankton could evolve to alter their body chemistry or they could migrate elsewhere, perhaps closer to the poles. Either way, such immense change may leave species higher up the food chain unable to feed themselves.

The new model does not specify precisely how phytoplankton will respond or which fish populations might flourish or flounder, but it is sufficiently detailed to indicate that the new ocean conditions will likely lead to widespread replacement of the phytoplankton now in place. Dutkiewicz’s model accounts for 100 different phytoplankton species whereas most other models include just three or four. “With such finer resolution,” Dutkiewicz says, “we can see how significantly ecosystem structures will change.”

The results depict a complex picture. As the temperature rises, many phytoplankton produce more offspring. But less mixing occurs between deep cold waters and warm surface waters—a phenomenon known as stratification. Most nutrients that phytoplankton rely on well up from the deep, so less mixing means less sustenance for the microorganisms. Oceans at low latitudes—already considered the deserts of the sea—will provide even fewer nutrients for microorganisms, leaving even less food for the fish that feed on them.

At higher latitudes, Dutkiewicz says, higher temperatures and less mixing could force phytoplankton to stay closer to the surface, where at least some nutrients are available. More sunlight in that top layer, however, could again change the mix of micro critters. “There is a huge range in size and type of phytoplankton, which can affect the fish that graze on them,” she says.

Dutkiewicz is now beginning to lend additional realism to the model by adding more factors, such as changing levels of nitrogen and iron. Ocean acidification is also high on her list—a chemical variable that could alter competition among phytoplankton, some of which are far more adaptable to changing pH levels than others. Any of these dials on the dashboard could significantly affect the fate of whole ecosystems.

Keeley Rafter

Engineering Systems Division

Noelle Selin, assistant professor of engineering systems and atmospheric chemistry, along with Amanda Giang (Technology and Policy Program graduate) and Shaojie Song (Department of Earth, Atmospheric and Planetary Sciences PhD student), recently traveled aboard the specialized NCAR C-130 research aircraft as part of a mission to measure toxic pollution in the air.

The team participated in the Nitrogen, Oxidants, Mercury and Aerosol Distributions, Sources and Sinks (NOMADSS) project. The NOMADSS project integrates three studies: the Southern Oxidant and Aerosol Study (SOAS), the North American Airborne Mercury Experiment (NAAMEX) and TROPospheric HONO (TROPHONO). Selin’s group focuses on the mercury component.

“Mercury pollution is a problem across the U.S. and worldwide,” Selin says. “However, there are still many scientific uncertainties about how it travels from pollution sources to affect health and the environment.”

Selin and her students used modeling to inform decisions about where the plane should fly and to predict where they might find pollution. Their collaborators at the University of Washington aboard the aircraft captured and measured quantities of mercury in the air, conducting a detailed sampling in the most concentrated mercury source region in North America.

“It was really exciting to experience first-hand how measurements and models could support each other to address key uncertainties in mercury science,” Giang says.

The main objectives of this project include constraining emissions of mercury from major source regions in the United States and quantifying the distribution and chemical transformations of mercury in the troposphere.

NOMADSS is part of the larger Southeast Atmosphere Study (SAS), sponsored by the National Science Foundation (NSF) in collaboration with the National Oceanic and Atmospheric Administration, the U.S. Environmental Protection Agency and the Electric Power Research Institute. This summer, the Southeast Atmosphere Study brought together researchers from more than 30 universities and research institutions from across the U.S. to study tiny particles and gases in the air from the Mississippi River to the Atlantic Ocean, and from the Ohio River Valley to the Gulf of Mexico. The study aims to investigate the relationship between air chemistry and climate change, and to better understand the climate and health impacts of air pollution in the southeastern U.S.

By Michael Davidson

Coal has been the primary fuel behind China’s economic growth over the last decade, growing 10 percent per year and providing three quarters of the nation’s primary energy supply. Like China’s economy, coal’s use, sale and broader impacts are also dynamic, changing with technology and spurring policy interventions. Currently, China’s coal sector from mine to boiler is undergoing a massive consolidation designed to increase efficiency. Coal’s supreme position in the energy mix appears to be unassailable.

However, scratch deeper and challenges begin to surface. Increasingly visible health and environmental damages are pushing localities to cap coal use. Large power plants with greater minimum outputs are shackling an evolving power grid trying to accommodate new energy sources. Further centralization of ownership is rekindling decade-old political discussions about power sector deregulation and reform.

This unique set of concerns raises the question: how long will coal remain king in China’s energy mix?

Read the rest at The Energy Collective...

This analysis is part of a new blog by MIT student Michael Davidson hosted by The Energy Collective on “Transforming China’s Grid.” Follow the blog here: http://theenergycollective.com/east-winds